To say I've been disappointed with the state of graphics cards since 2018 would be an understatement. Last year was particularly bad for GPUs, and although my pessimistic predictions for this year haven't been totally correct, the industry still looks pretty dismal. With the release of Nvidia's RTX 4060 Ti and AMD's RX 7600, it's official: a better performance-to-price ratio isn't guaranteed anymore. It's looking like the old paradigm (at least 20% more performance for the same price) is being replaced by a focus on features of dubious utility instead of value improvements.

That's bad news for anybody hoping for another RTX 30 or RX 6000 moment where everything got back on track. Nvidia and AMD are no longer PC gaming companies, and they don't need to compete in a market that ultimately doesn't make them much money anymore. This is the new normal for graphics cards, and we have little choice but to accept it.

Yes, things really have gotten worse

I think we all know the rate of improvement for graphics cards has slowed to a crawl (we saw it firsthand in the 4060 Ti and 7600 reviews), but it's important to see how bad it actually is. I've created some tables that show the generational value improvements for high-end Nvidia GPUs, upper midrange Nvidia GPUs, and midrange AMD GPUs as of each card's launch day. So, the 780 is compared to the 680, the 980 to the 780, the 1080 to the 980, and so on.

An important part of this comparison is that it only considers actual retail pricing. For example, the 980 is compared to the 780 using the price of each card on the day the 980 launched: $550 for the 980 and $500 for the 780. MSRP is very difficult to use in this comparison because it was basically meaningless from 2020 to early 2022 due to supply chain issues, and retail pricing is a little more realistic anyway. I grabbed performance data at 1440p from TechPowerUp and used a variety of reviews from publications like Tom's Hardware and TechSpot, as well as CamelCamelCamel, to reconstruct pricing data, but do keep in mind this is all estimated.

| | GTX 780 | GTX 980 | GTX 1080 | RTX 2080 | RTX 3080 10GB | RTX 4080 |
|---|---|---|---|---|---|---|
| Launch Day Price | $650 | $550 | $600 | $700 | $700* | $1200 |
| Price When Replaced | $500 | $530 | $490 | $700* | $750 | N/A |
| Performance Gain | 25% (vs. 680) | 30% | 65% | 35% | 50%* | 35% |
| Value Gain | -10% | 20% | 45% | -5% | 50%* | -15% |

\* Actual price and value gain are unknown due to the GPU shortage of 2020-2022
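The article doesn't spell out how the Value Gain row is derived, but the non-shortage columns are consistent with comparing performance per dollar: the new card's performance divided by its launch-day price, relative to the old card's performance divided by its price on that same day. The sketch below assumes that formula; the function name `value_gain` and the data layout are illustrative, not the author's. Run against a few flagship columns, it lands within a few percentage points of the table, whose figures appear to be rounded to the nearest 5%. The asterisked shortage-era columns were estimated differently and may not fit it.

```python
def value_gain(perf_gain: float, old_price: float, new_price: float) -> float:
    """Change in performance per dollar, as a fraction (0.20 means 20% better value).

    Assumed formula, not taken from the article.
    perf_gain: how much faster the new card is (0.30 means 30% faster)
    old_price: retail price of the outgoing card on the new card's launch day
    new_price: retail price of the new card on its launch day
    """
    old_perf_per_dollar = 1.0 / old_price  # old card's performance normalized to 1.0
    new_perf_per_dollar = (1.0 + perf_gain) / new_price
    return new_perf_per_dollar / old_perf_per_dollar - 1.0


# (card, performance gain, predecessor's price when replaced, launch-day price),
# taken from the flagship table
FLAGSHIPS = [
    ("GTX 980", 0.30, 500, 550),
    ("GTX 1080", 0.65, 530, 600),
    ("RTX 2080", 0.35, 490, 700),
    ("RTX 4080", 0.35, 750, 1200),
]

for name, perf, old_price, new_price in FLAGSHIPS:
    print(f"{name}: {value_gain(perf, old_price, new_price):+.1%}")
```

Plugging in the 4080's numbers shows why it reads as a step backward: 35% more performance at 60% more money ($1,200 versus $750) works out to roughly 16% less performance per dollar.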

For Nvidia's flagship cards, it's actually hard to see a trend here since half of these generations improved value and half of them went backward, though I put an asterisk on the 3080 since it's rarely ever been available at MSRP. The 4080's improvement (or lack thereof) over the 3080 10GB is certainly the worst we've seen in at least a decade: even though the 3080 was still selling above its MSRP when the 4080 launched, the 4080's $1,200 price more than cancels out its 35% performance gain. However, flagship cards aren't usually priced very aggressively, so let's take a look at some upper midrange GPUs that tend to target better bang for the buck.

| | GTX 770 | GTX 970 | GTX 1070 | RTX 2060 | RTX 3060 Ti | RTX 4060 Ti 8GB |
|---|---|---|---|---|---|---|
| Launch Day Price | $400 | $330 | $380 | $350 | $400* | $400 |
| Price When Replaced | $340 | $320 | $320 | $280* | $420 | N/A |
| Performance Gain | 15% (vs. 670) | 40% | 60% | 15% | 40%* | 10% |
| Value Gain | 10% | 45% | 35% | 5% | -20%* | 15% |

\* Actual price and value gain are unknown due to the GPU shortage of 2020-2022; uses RTX 2060 Super price and performance data

Here we have Nvidia's $300 to $400 cards, and again I have to point out that RTX 30 series pricing has been very inconsistent, so the RTX 3060 Ti column isn't exactly reliable. But even after the GPU shortage cleared up early last year, the 3060 Ti generally wasn't available at $400 until the 4060 Ti launched. For most of 2022 and much of 2023, it retailed for $450 or more, and if you could buy a 2060 or 2060 Super at MSRP or less, the 3060 Ti would have been pretty mediocre value by comparison. By extension, the 4060 Ti offering better value than a poor-value card isn't that impressive.

| | R9 380 2GB | RX 480 4GB | RX 5600 XT | RX 6600 | RX 7600 |
|---|---|---|---|---|---|
| Launch Day Price | $200 | $200 | $280 | $330** | $270 |
| Price When Replaced | $160 | $210* | $220** | $220 | N/A |
| Performance Gain | 5% (vs. 285) | 60% | 60%* | 10%** | 25% |
| Value Gain | 5% | 25% | 20%* | 10%** | 0% |

\* Uses RX 580 price and performance data

\*\* Actual price and value gain are unknown due to the GPU shortage of 2020-2022

Finally, AMD's midrange cards sit in the $200–$300 region. Again, it's hard to evaluate GPUs that came out during the shortage (like the RX 6600). You can also see that AMD's generational gains were very consistent from the 300 series to the 5000 series, whereas Nvidia's cards have much higher highs and much lower lows. The latest RX 7600 is 25% faster than the RX 6600, but it's also about 25% more expensive, effectively making it an RX 6650 XT. It's not exactly moving the needle, to say the least.

So what do we make of all this data? Well, things were on track from 2014 to 2018, but the period from 2018 to 2023 has been much worse by comparison. Performance gains are still happening, but prices are also going up, making those improvements somewhat pointless. Furthermore, these tables don't show how VRAM has changed from generation to generation, and while AMD has been adding VRAM over the generations, Nvidia mostly hasn't, which is another factor to consider.

Time is also an important consideration. Although each column is the same width, they don't represent the same amount of time. With the 700, 900, and 10 series, it took Nvidia an average of a year and a half to launch a new generation. But since the 20 series, that's gone up to about two years or more, which means each generation's value gain is spread over a longer period. AMD is arguably worse because its launches are less consistent, with gaps ranging from one to three years, not to mention all the rebrands in the mid to late 2010s, though at least those often came with price cuts.
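The effect of a slower cadence is easier to see as a yearly rate. A minimal sketch, assuming gains compound and using the intro's 20% "old paradigm" figure as a hypothetical per-generation value gain (the helper `annualized_gain` is illustrative): delivered every 1.5 years, 20% works out to about 13% a year, but stretched to two years it drops to about 9.5% a year.

```python
def annualized_gain(gain_per_generation: float, years_per_generation: float) -> float:
    """Equivalent compound gain per year for a gain delivered once per generation."""
    return (1.0 + gain_per_generation) ** (1.0 / years_per_generation) - 1.0


# Hypothetical: the same 20% value gain per generation on different release cadences
for years in (1.5, 2.0, 2.5):
    print(f"20% every {years} years is about {annualized_gain(0.20, years):.1%} per year")
```

Compounding is the right model here because each generation's gain builds on the previous card's performance per dollar rather than on a fixed baseline.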

Moore's Law makes it harder to make cheap GPUs

So Nvidia and AMD have pressed the brakes, but why? Both companies were in fierce competition with each other for two decades, and even when Nvidia was well in the lead, it gave us great GPUs like the 900 series and 10 series. It comes down to two major ways the industry has been evolving since roughly 2016, and these big changes have sidelined gaming graphics cards.

One of these factors is the death of Moore's Law. Every processor is made on a process node, and for decades, more advanced nodes meant higher frequencies, more transistor density, lower power consumption, and lower manufacturing costs. After the industry hit the 28nm node in 2011, it found that progressing to smaller nodes was becoming extremely difficult. TSMC's 16nm node took about four years to develop, and it prevented Nvidia and AMD from making 16nm GPUs when they originally planned (which is partly why the GTX 700 series and Radeon 300 series have poor value gains).

Nvidia and AMD have different approaches to dealing with the fact that manufacturing isn't getting any cheaper. Nvidia insists that people should just buy more expensive GPUs because DLSS will make up for the performance, even though standard DLSS is only available in roughly 300 games and DLSS 3 frame generation is in just a few dozen (and it only increases the displayed frame rate, not responsiveness, while adding input latency). AMD uses chiplets so that it can use cutting-edge nodes for logic like the compute cores and cheaper nodes for cache and memory controllers... while also charging higher prices.

Gaming isn't nearly as profitable as the data center

Moore's Law alone doesn't account for the lack of competition, however, as both companies could simply cut their margins in an attempt to get a bigger slice of the pie, which is something Nvidia and AMD have done at times. The simple fact is that Nvidia and AMD have lost interest in gaming graphics cards. According to Jon Peddie Research's latest figures, graphics card sales have plummeted since mid-2022 and are the lowest they've been in the past 20 years. This raises the question: how are these two companies making money if they're not selling graphics cards? Well, they are selling GPUs, just not to gamers.

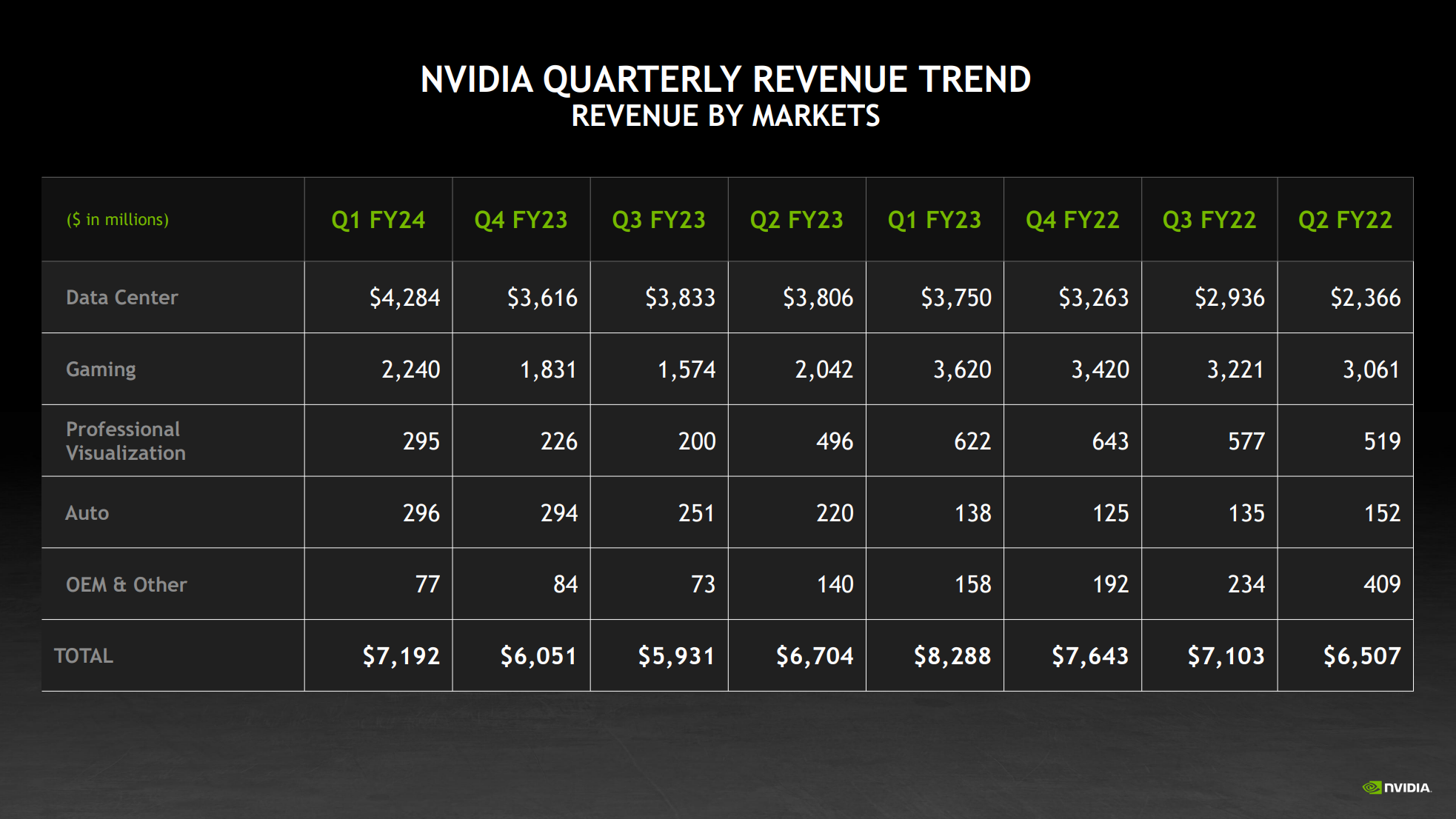

Per the company's latest earnings report, Nvidia's biggest source of income is from the data center sector, not gaming. For many quarters, data center revenue has been double that of gaming (almost triple in one quarter), and it's only increasing while gaming continues to decrease. This is the reverse of Nvidia's historical revenue, as gaming was typically the breadwinner up until 2021, which was the first year that data center revenue overtook gaming revenue.

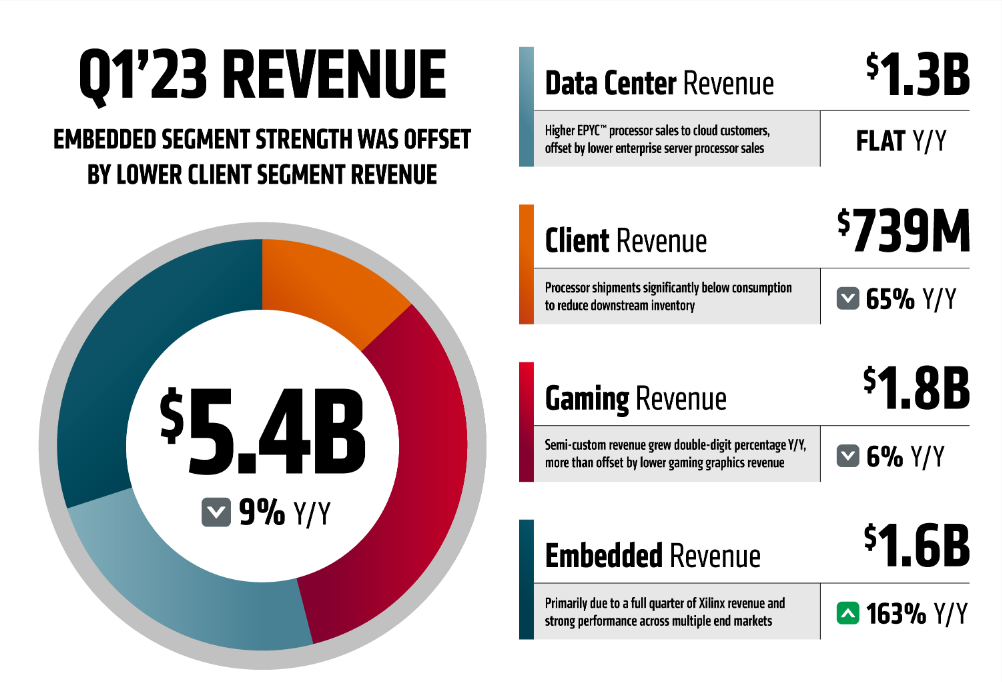

AMD's latest earnings tell a similar story, though its revenue categories are somewhat different. The data center segment includes server CPUs and GPUs, client means Ryzen CPUs, and gaming covers dedicated gaming GPUs. Although that reported revenue makes it look like AMD has a big stake in the gaming GPU market, the gaming segment also includes sales of PlayStation and Xbox APUs, which massively inflates it and obscures how many graphics cards AMD actually sells.

It's hard to say how much money AMD is making from selling graphics cards to consumers, but even if we assume AMD is making just as much money from RX 6000 and 7000 as it is from Ryzen 5000, 6000, and 7000, its data center revenue was $1.3 billion in Q1 2023, almost twice that of Ryzen CPU sales and therefore almost twice that of Radeon graphics cards too. More realistically, given Radeon's market share is about 10%, I'd estimate it makes no more than $400 million a year.

New opportunities in the data center segment have basically killed efforts for the gaming graphics card, which is why I don't expect things to get back to normal. This is the new normal. The cost of making GPUs isn't going to decrease, and the data center is almost certainly not going to suddenly become less profitable than the gaming graphics card market. I wouldn't even pin my hopes on Intel, which has the same GPU ambitions as Nvidia and AMD. I hate to say it, but the good old days are behind us. And it sucks.